Autonomous Curiosity

Why?

It is almost taken for granted that deep learning algorithms require large amounts of training data, which makes them difficult and time-consuming to create. How, then, can we build algorithms that adapt to new situations in real time? Humans don’t have this problem. A human student, given limited direction from a teacher, can bootstrap their own knowledge. Good students exhibit both the ability to study (absorb the information given to them) and the ability to be curious (seek out new knowledge as needed). To learn effectively, a great student studies existing knowledge, seeks out new knowledge, and asks a teacher or mentor for guidance only when needed. How do we enable the same for deep learning algorithms?

What?

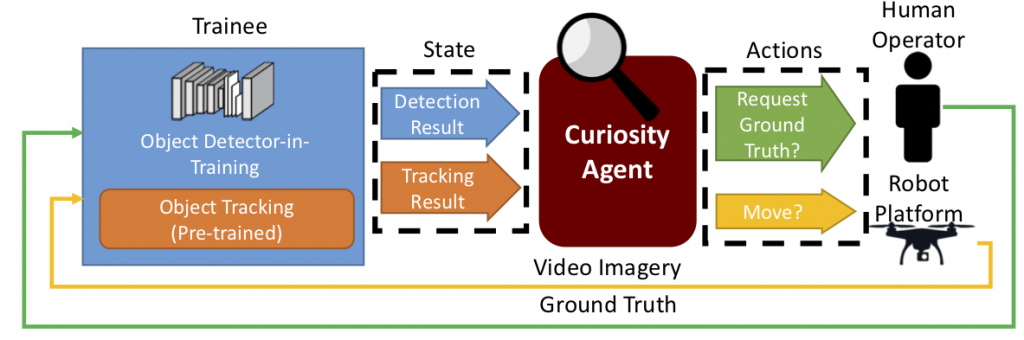

Consider a neural network-based object detection algorithm running onboard a small unmanned aerial system (UAS). We would like this algorithm to be retrainable on new subjects at the direction of a human operator, without requiring much of the operator’s time. In ClickBAIT, we examined the problem of study: extracting as much useful information as possible from the human’s limited actions.

In Autonomous Curiosity, we examine the problem of curiosity by enabling the UAS to move around the world independently, looking for new imagery that will help it learn. In this approach, a second neural network, the curiosity agent, is pre-trained with knowledge of what makes a particular view of a subject useful for training. It directs the UAS toward views that are useful and away from those that are not. Building on VIPER, we can teach this agent how to learn in the safety of simulation. This approach accelerates training beyond ClickBAIT alone and relieves the pilot of the task of finding useful views.
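
To make the idea of a curiosity agent concrete, here is a minimal sketch of a view-selection loop. It is not the project's implementation: `View`, `toy_usefulness`, and `curiosity_loop` are hypothetical names, and the scoring function is a hand-written stand-in for the pre-trained network, assuming only that "useful" means "different from views already collected".

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class View:
    """A candidate camera pose around the subject (hypothetical type)."""
    azimuth_deg: float
    distance_m: float


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two headings, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def toy_usefulness(view: View, seen: Sequence[View]) -> float:
    """Stand-in for the curiosity agent (assumption, not the real network).

    Scores a view in [0, 1] by how far its azimuth is from every view
    already collected, so novel angles score higher.
    """
    if not seen:
        return 1.0
    nearest = min(angular_gap(view.azimuth_deg, s.azimuth_deg) for s in seen)
    return nearest / 180.0


def curiosity_loop(
    candidates: Sequence[View],
    score: Callable[[View, Sequence[View]], float],
    steps: int,
    threshold: float = 0.1,
) -> List[View]:
    """Greedily visit the highest-scoring view until none is useful enough."""
    collected: List[View] = []
    remaining = list(candidates)
    for _ in range(steps):
        if not remaining:
            break
        best = max(remaining, key=lambda v: score(v, collected))
        if score(best, collected) < threshold:
            break  # nothing left that is worth flying to
        collected.append(best)
        remaining.remove(best)
    return collected


if __name__ == "__main__":
    views = [View(0, 5), View(10, 5), View(180, 5), View(90, 5)]
    for v in curiosity_loop(views, toy_usefulness, steps=4):
        print(v)
```

In a real system, the scoring function would be replaced by the learned agent, and the candidate list by poses the UAS can actually reach. The greedy loop is only one possible policy, chosen here for brevity.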