Core Concepts

The TickTalk system is made up of three elements: a language, a compiler, and a runtime environment. The runtime can be augmented to run as a simulation with a custom backend to simulate the physics of an environment and sensors/actuators that interact with it.

TTPython Language

The TTPython language is a Domain Specific Language (DSL) based on Python3. Programmers write their distributed, time-sensitive applications in Pythonic syntax, with custom additions for specifying synchronized clocks, streaming operations, deadlines and failure handlers, restrictions on where pieces of code can run, and more.

The targeted applications in this domain are inherently parallel due to the distributed nature of the sensing, actuating, and processing hardware. The TTPython language compiles to a dataflow graph to make best use of this parallelism.

Operations within the TTPython program are nodes in this graph, and operate as functions with persistent (static) variables, but no direct memory sharing with other functions aside from returned values.

Compiling to Dataflow Graphs

The distributed, time-sensitive applications TTPython targets are inherently meant to run across a collection of (potentially heterogeneous) devices.

Dataflow graphs represent TTPython programs in their compiled form, as these effectively exploit the parallelism and isolation properties necessary in well-constructed distributed applications. The compiled dataflow graph is constructed of nodes and arcs, the former of which we call Scheduling Quanta for historical reasons. Each Scheduling Quantum (SQ) represents a function to be executed, and the arcs represent implicit communication links between SQs.

In TTPython, we augment traditional tagged-token dataflow graphs with timing semantics to better facilitate stream generation, data fusion, and timely interaction with the physical environment. The result of this is a timed dataflow model of computation.

Follow these links for more information on the history of dataflow and on the compilation process.

The Scheduling Quantum

In TTPython, the fundamental unit of computation is the graph node. We borrow the concept of the SQ as the embodiment of a graph node, and we further adopt the firing rules of the MIT Tagged-Token Dataflow, but with modifications to handle time-sensitive operations, as we’ll see shortly. SQs capture the core notions of synchronization and computation and support the concept of arbitrary mapping of units of computation onto actual computing devices. Let’s consider the structure of a single TTPython SQ.

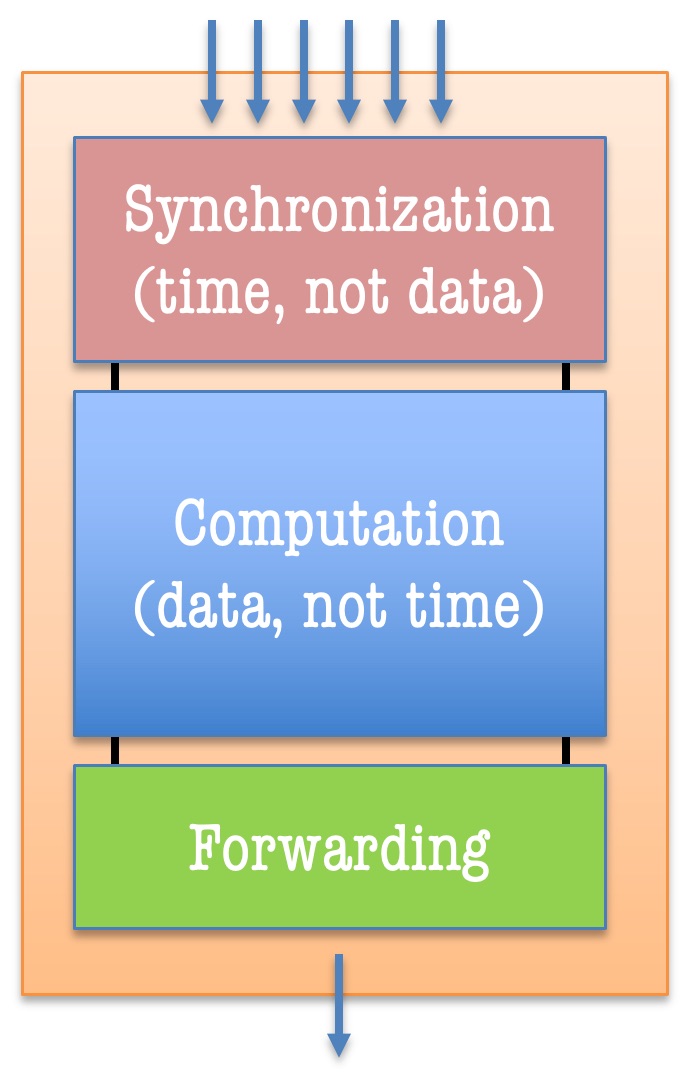

In the above image, the SQ is made up of three parts. In the first part, inputs arriving from other SQs are collected and held until a “complete set” is found; in other words, each SQ implements a synchronization barrier that is passed when a firing rule is satisfied. The second part executes the code within the SQ to completion, producing output tokens. Third and finally, the outputs of the SQ are forwarded to all SQs that will receive those output(s) as input.
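The three parts described above can be sketched in plain Python. This is only an illustrative model, not the TTPython runtime: the class `SketchSQ`, its fields, and the simple "one value per input port" firing rule are all assumptions made for this example, and the time-aware firing rules are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class SketchSQ:
    """Hypothetical stand-in for a Scheduling Quantum (not a TTPython class)."""
    name: str
    input_ports: List[str]        # inputs this SQ must collect before firing
    func: Callable[..., Any]      # the code the SQ runs when it fires
    # Downstream SQs, each paired with the input port that receives our output.
    successors: List[Tuple["SketchSQ", str]] = field(default_factory=list)
    _waiting: Dict[str, Any] = field(default_factory=dict, init=False)
    outputs: List[Any] = field(default_factory=list, init=False)

    def receive(self, port: str, value: Any) -> None:
        # Part 1: hold inputs until a complete set has arrived (the barrier).
        self._waiting[port] = value
        if all(p in self._waiting for p in self.input_ports):
            args = [self._waiting[p] for p in self.input_ports]
            self._waiting.clear()
            self._fire(args)

    def _fire(self, args: List[Any]) -> None:
        # Part 2: execute the SQ's code to completion.
        result = self.func(*args)
        self.outputs.append(result)
        # Part 3: forward the output to every SQ that takes it as input.
        for successor, port in self.successors:
            successor.receive(port, result)


# Usage: an adder SQ feeding a doubling SQ.
doubler = SketchSQ("double", ["x"], lambda x: x * 2)
adder = SketchSQ("add", ["a", "b"], lambda a, b: a + b, successors=[(doubler, "x")])
adder.receive("a", 1)   # incomplete set: nothing fires yet
adder.receive("b", 2)   # complete set: adder fires, then doubler fires
```

In this sketch the adder does nothing after its first input and fires only once both `a` and `b` are present, which is the barrier behavior the text describes.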

In our form of timed dataflow computation, synchronization barriers use time-intervals to satisfy firing rules, primarily by searching for overlaps in time-intervals that suggest concurrency between sampled data values. The outputs generated by SQs that fire on values carrying overlapping time-intervals will themselves be given time-intervals equivalent to the intersection of those intervals. In practice, we implement a variety of similar time-cognizant firing rules for other primitive actions like setting deadlines or generating streams of sampled data. We’ll explore these in due time.
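As a minimal sketch of the overlap rule just described, the function below checks whether a set of time-intervals share a common overlap and, if so, returns their intersection as the interval for the output. The `(start, stop)` tuple representation and the name `intersect` are assumptions for illustration; the runtime's actual interval type may differ.

```python
from typing import List, Optional, Tuple

# Assumed representation: (start, stop) in seconds on a shared clock.
Interval = Tuple[float, float]


def intersect(intervals: List[Interval]) -> Optional[Interval]:
    """Return the common overlap of all intervals, or None if there is none."""
    if not intervals:
        return None
    start = max(lo for lo, _ in intervals)   # latest start
    stop = min(hi for _, hi in intervals)    # earliest stop
    # A non-empty overlap exists only if the latest start precedes the earliest stop.
    return (start, stop) if start < stop else None


overlapping = intersect([(0.0, 10.0), (4.0, 12.0)])   # (4.0, 10.0)
disjoint = intersect([(0.0, 3.0), (5.0, 8.0)])        # None
```

Under this rule, an SQ would fire on the first pair of inputs and tag its output with `(4.0, 10.0)`, while the second pair would not satisfy the barrier.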

Implicit Communication Arcs

SQs behave like microservices whose communication links are implicitly created during the compilation phase. When SQs co-reside on the same device, there is no need to send data over the network; when one SQ must send to another that resides elsewhere in the network, that communication is still handled implicitly. The user need not specify any special communication protocol or format for the data communication.

The Runtime System

At runtime, the timed dataflow graph is interpreted across a distributed system of (potentially heterogeneous) devices. Here we describe a few key points about the hardware (or simulated hardware) elements of these devices (or Ensembles in our parlance).

The devices composing a TickTalk system may vary widely: servers, personal computers, mobile phones, industrial controllers, embedded systems, etc. It is difficult to apply one term to them all, given the wide variety of computing, memory, storage, time-keeping, sensing, and actuating hardware elements that may be contained within a single one. For this reason, we call singular entities in TickTalk Ensembles of such hardware elements. The TTPython runtime allows these to all work together on the common goal of interpreting the timed dataflow graph. The main requirement is that an Ensemble must be able to run Python3.

Ensembles may be physical or simulated devices, and a simulation backend can be plugged in to handle the physics and its relation with the Ensembles.

Mapping SQs to Ensembles

Mapping SQs to Ensembles intelligently is no simple task. At a minimum, a programmer may specify hard restrictions on the mapping of their program, like running an acoustic waveform generator function on an Ensemble that has a microphone or similarly running an image-processing SQ on an Ensemble with a GPU. When we refer to mapping, we mean finding a sufficient solution that satisfies the programmer’s hard constraints and reasonably satisfies other objectives. Example objective functions include minimizing power consumption on battery-operated devices and minimizing end-to-end latency between sensing and actuation. For these types of multi-SQ objectives, finding the optimal solution is generally NP-hard. Moreover, this optimal solution may change due to nondeterministic effects like network congestion or CPU utilization.
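To make the split between hard constraints and objectives concrete, here is a small sketch that places a single SQ. It first filters out Ensembles lacking a required capability, then picks the cheapest remaining one under a given cost. Everything here is hypothetical: the function `map_sq`, the capability strings, and the cost table are invented for illustration and are not TTPython's mapping algorithm. Placing one SQ at a time like this is a greedy simplification and does not address the multi-SQ objectives mentioned above.

```python
from typing import Dict, Optional, Set


def map_sq(
    required: Set[str],
    ensembles: Dict[str, Set[str]],
    cost: Dict[str, float],
) -> Optional[str]:
    """Pick the lowest-cost Ensemble that offers every required capability."""
    # Hard constraint: the Ensemble must provide all required capabilities.
    candidates = [name for name, caps in ensembles.items() if required <= caps]
    if not candidates:
        return None  # no Ensemble satisfies the hard constraints
    # Soft objective: among valid candidates, minimize the supplied cost.
    return min(candidates, key=lambda name: cost[name])


# Hypothetical Ensembles and an assumed per-Ensemble latency estimate (ms).
ensembles = {
    "camera_node": {"camera"},
    "edge_server": {"gpu"},
    "workstation": {"gpu", "camera"},
}
latency_ms = {"camera_node": 5.0, "edge_server": 20.0, "workstation": 12.0}

gpu_host = map_sq({"gpu"}, ensembles, latency_ms)       # "workstation"
lidar_host = map_sq({"lidar"}, ensembles, latency_ms)   # None
```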

It is worth reiterating here that TTPython is not a language for safety-critical real-time systems that must meet hard real-time requirements such as worst-case execution time bounds. TTPython relies on best-effort execution that statistically meets the programmer’s intent.

Runtime Processing on Tokens

At runtime, the graph operates by sending value-carrying tokens between the SQs. In addition to values, each token carries a tag that helps the runtime determine how to use and where to send the token. This includes a destination (the SQ and its Ensemble host) and context (an application identifier and a time-interval).
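One way to picture such a token is as a value paired with a tag that holds the destination and context fields listed above. The sketch below uses assumed names (`Tag`, `Token`, `is_local`, and every field name); it is not the runtime's actual token layout.

```python
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass(frozen=True)
class Tag:
    """Hypothetical tag layout: destination plus context."""
    sq_name: str                    # destination: which SQ consumes the token
    ensemble: str                   # destination: the Ensemble hosting that SQ
    app_id: str                     # context: application identifier
    interval: Tuple[float, float]   # context: (start, stop) time-interval


@dataclass(frozen=True)
class Token:
    value: Any
    tag: Tag


def is_local(token: Token, this_ensemble: str) -> bool:
    """True if the token's destination SQ lives on this Ensemble."""
    return token.tag.ensemble == this_ensemble


reading = Token(
    value=21.5,
    tag=Tag(sq_name="average", ensemble="edge_server",
            app_id="thermo_app", interval=(0.0, 3600.0)),
)
stay_on_device = is_local(reading, "edge_server")   # True
needs_network = not is_local(reading, "camera_node")  # True
```

A check like `is_local` hints at how a runtime could decide whether a token is delivered in-process or sent over the network without the programmer's involvement.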

The time-interval is the most important of these, as it helps us decide which tokens are alike enough to process together. For instance, suppose two temperature sensors each produce a sample every hour. Intuitively, we would like to compare or combine those temperatures that were collected at the same time; it would be less intuitive to, say, average the temperature from one sensor collected at midnight with a sample from the other at 4 p.m. At the other extreme, finding strict equality in timestamps is both unlikely and unnecessary. A physical environment changes over time, not instantaneously, so samples of it have a period of validity. For this reason, we think not in terms of timestamps but in terms of time-intervals when searching for concurrency in preparation for stream fusion. This is the foundation for our time-based synchronization barriers, but as we’ll see, using intervals as timing primitives has many other practical applications.

This probably seems like a lot of information to take in, so let’s work through a more gradual introduction to how TTPython programs are written, compiled, and run by following our Tutorial.